Abstract

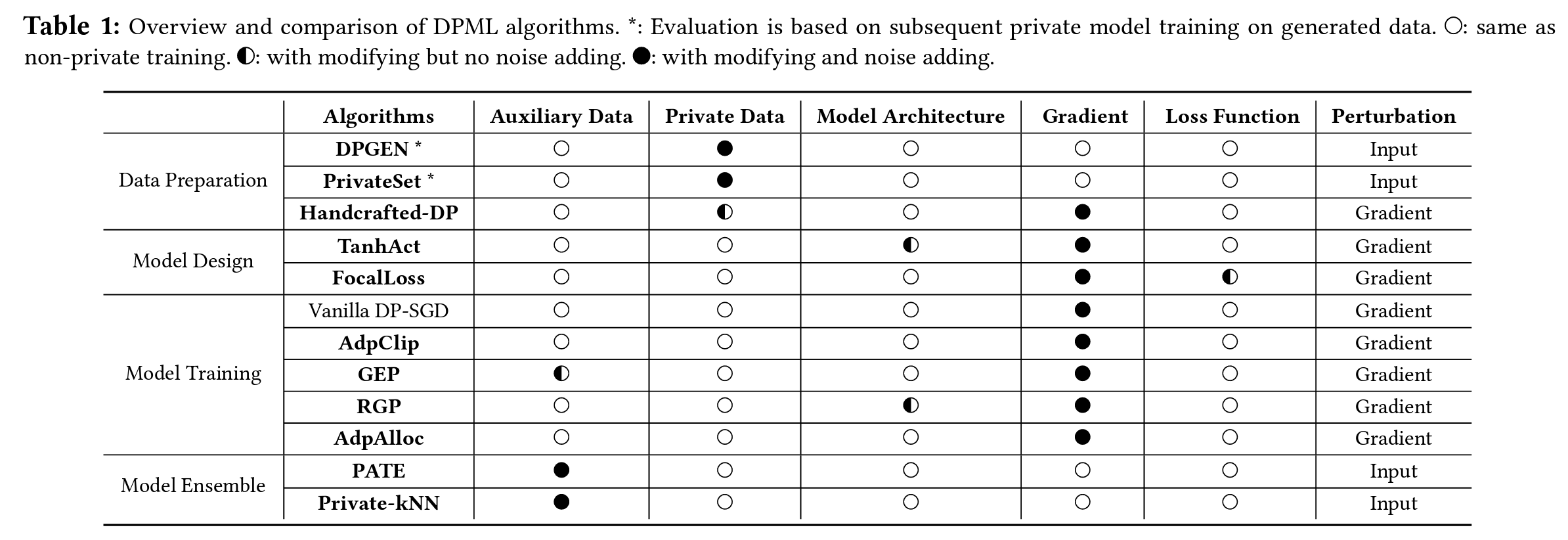

Differential privacy (DP), a rigorous mathematical definition that quantifies privacy leakage, has become a well-accepted standard for privacy protection. Combined with powerful machine learning techniques, differentially private machine learning (DPML) is increasingly important. As the most classic DPML algorithm, DP-SGD incurs a significant loss of utility, which hinders DPML's deployment in practice. Many recent studies have proposed improved algorithms based on DP-SGD to mitigate this utility loss. However, these studies are isolated and cannot comprehensively measure the performance of the improvements they propose. More importantly, there is a lack of comprehensive research comparing the improvements in these DPML algorithms across utility, defensive capabilities, and generalizability. We fill this gap by performing a holistic measurement of improved DPML algorithms on utility and defense capability against membership inference attacks. We first present a taxonomy of where improvements are located in the machine learning life cycle. Based on this taxonomy, we jointly perform an extensive measurement study of the improved DPML algorithms, covering 12 algorithms, four model architectures, four datasets, two attacks, and various privacy budget configurations. We also cover state-of-the-art label differential privacy (Label DP) algorithms in the evaluation. According to the empirical results, DP can effectively defend against membership inference attacks, and sensitivity-bounding techniques such as per-sample gradient clipping play an important role in defense. We also explore some improvements that can maintain model utility and defend against membership inference attacks more effectively. Experiments show that Label DP algorithms incur less utility loss but are vulnerable to membership inference attacks. Machine learning practitioners may benefit from these evaluations when selecting appropriate algorithms.
To support our evaluation, we implement DPMLBench, a modular, reusable software tool that enables sensitive data owners to deploy DPML algorithms and serves as a benchmark tool for researchers and practitioners.
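For readers unfamiliar with the per-sample gradient clipping mentioned above, the following is a minimal sketch of a single DP-SGD update in plain Python. It is an illustration only and not code from DPMLBench: the function names (`clip_gradient`, `dp_sgd_step`) and the parameters (`clip_norm`, `noise_multiplier`) are hypothetical, and privacy accounting is omitted entirely.

```python
import math
import random

def clip_gradient(grad, clip_norm):
    """Scale one per-sample gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def dp_sgd_step(params, per_sample_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each sample's gradient, sum, add noise, average."""
    batch_size = len(per_sample_grads)
    # Clipping bounds each sample's contribution (the sensitivity) by clip_norm.
    clipped = [clip_gradient(g, clip_norm) for g in per_sample_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # Gaussian noise is calibrated to the clipping bound (assumed convention).
    sigma = noise_multiplier * clip_norm
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]

# Toy usage: two samples with gradient norms 5.0 and 0.5.
rng = random.Random(0)
params = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4]]
new_params = dp_sgd_step(params, grads, lr=0.1, clip_norm=1.0,
                         noise_multiplier=1.1, rng=rng)
```

In this toy batch only the first gradient is rescaled, since the second already lies within the clipping bound. A larger `noise_multiplier` corresponds to a smaller privacy budget at the cost of a noisier update, which is the utility loss the evaluation measures.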

Citation

@inproceedings{WZZCMLFC23,
author = {Chengkun Wei and Minghu Zhao and Zhikun Zhang and Min Chen and Wenlong Meng and Bo Liu and Yuan Fan and Wenzhi Chen},
title = {{DPMLBench: Holistic Evaluation of Differentially Private Machine Learning}},
booktitle = {{ACM CCS}},
publisher = {},
year = {2023},
}